[YOLOv7](https://github.com/WongKinYiu/yolov7) was a success last year, and now Ultralytics has released [YOLOv8](https://github.com/ultralytics/ultralytics). In this article, I will show how to run inference with pretrained weights and how to retrain YOLOv8 on a custom dataset.

Step 1: Install the Ultralytics package (pip install ultralytics)

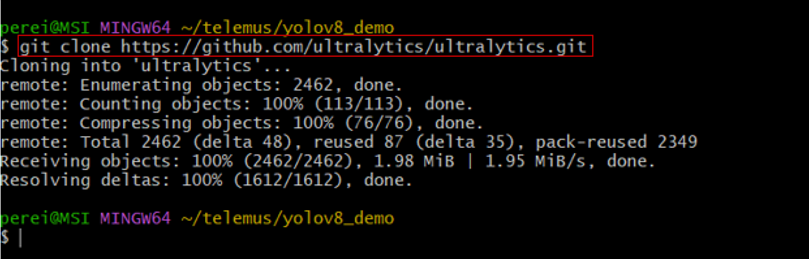

Step 2: Clone the ultralytics repo (git clone https://github.com/ultralytics/ultralytics.git)

Step 3: Install all dependencies from the requirements.txt file in the cloned repository (pip install -r requirements.txt)

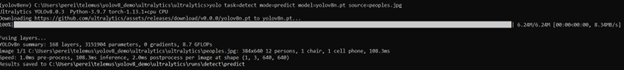

Step 4: Since our task is detection, we set task=detect, and since we want to run inference, we set mode=predict. We have not trained a model yet, so we use the pretrained weights yolov8n.pt. You don't have to download the weights separately; they are downloaded automatically the first time the command runs.

yolo task=detect mode=predict model=yolov8n.pt source=peoples.jpg
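The same prediction can also be run from Python instead of the command line. The following is a minimal sketch, assuming the ultralytics package from Step 1 is installed. The helper name detect_objects is my own and not part of the library; peoples.jpg is the image used in the command above.

```python
def detect_objects(source, weights="yolov8n.pt"):
    """Run detection on `source` and return a list of (class_name, confidence).

    detect_objects is a hypothetical helper written for this article,
    not an Ultralytics function.
    """
    # Imported inside the function so this file can be loaded
    # even on a machine where ultralytics is not installed yet.
    from ultralytics import YOLO

    model = YOLO(weights)  # pretrained weights are fetched on first use
    # save=True asks the library to write the annotated output to disk.
    results = model.predict(source=source, save=True)

    detections = []
    for result in results:  # one result object per image or video frame
        class_ids = result.boxes.cls.tolist()
        confidences = result.boxes.conf.tolist()
        for class_id, confidence in zip(class_ids, confidences):
            # model.names maps a numeric class id to its label
            detections.append((model.names[int(class_id)], confidence))
    return detections
```

Calling detect_objects("peoples.jpg") should return one (label, confidence) pair per detected object, which is handy when you want to post-process the detections rather than only look at the saved image.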

Step 5: Training on custom datasets

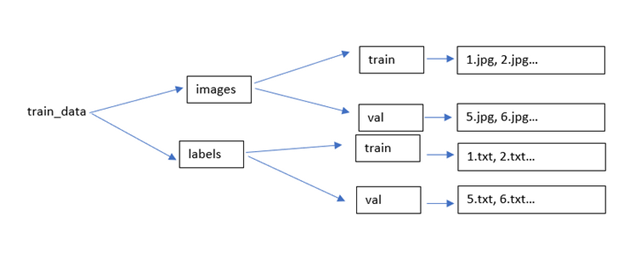

I organized the dataset in the following format:

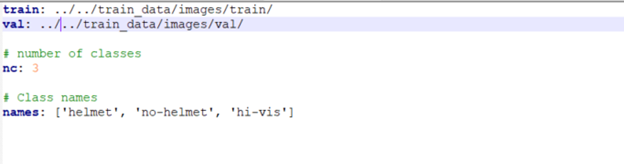
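Before training, it can help to confirm that every image has a label file. The sketch below assumes the common YOLO layout of images/train, images/val, labels/train and labels/val under one root folder, with one .txt label file per image. That layout is an assumption on my part and may differ from the screenshot above; find_unlabeled_images is a hypothetical helper name.

```python
from pathlib import Path

# Assumed layout (common YOLO convention, may differ from your dataset):
#   <root>/images/<split>/*.jpg   and   <root>/labels/<split>/*.txt
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def find_unlabeled_images(root, split):
    """Return the names of images in `split` that have no matching .txt label."""
    image_dir = Path(root) / "images" / split
    label_dir = Path(root) / "labels" / split

    missing = []
    for image_path in sorted(image_dir.iterdir()):
        if image_path.suffix.lower() not in IMAGE_SUFFIXES:
            continue  # skip anything that is not an image
        label_path = label_dir / (image_path.stem + ".txt")
        if not label_path.is_file():
            missing.append(image_path.name)
    return missing
```

An image without a label file is not always an error, since background images with no objects may be left unlabeled on purpose, so treat the returned list as something to review rather than a failure.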

Create a custom_data.yaml file that describes the dataset directories. In this task I will be detecting the safety helmets of construction workers. There are 3 classes: helmet, no-helmet and hi-vis.

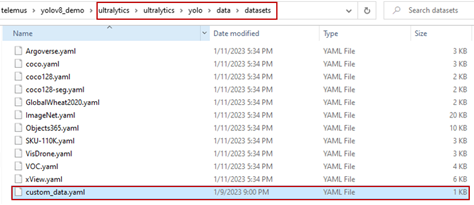
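If you prefer to generate the file rather than type it, here is a small sketch that writes a dataset YAML with train and val paths, the class count and the class names. The three class names come from this article; the directory paths in the usage note are placeholders I made up, and build_dataset_yaml and write_dataset_yaml are hypothetical helper names.

```python
from pathlib import Path

# The three classes used in this article.
CLASS_NAMES = ["helmet", "no-helmet", "hi-vis"]


def build_dataset_yaml(train_dir, val_dir, class_names):
    """Return the text of a dataset YAML: train/val paths, class count, names."""
    lines = [
        f"train: {train_dir}",
        f"val: {val_dir}",
        f"nc: {len(class_names)}",  # number of classes
        "names:",
    ]
    lines += [f"  - {name}" for name in class_names]
    return "\n".join(lines) + "\n"


def write_dataset_yaml(path, train_dir, val_dir, class_names=CLASS_NAMES):
    """Write the YAML text to `path` and return the path as a Path object."""
    path = Path(path)
    path.write_text(build_dataset_yaml(train_dir, val_dir, class_names))
    return path
```

For example, write_dataset_yaml("custom_data.yaml", "data/images/train", "data/images/val") creates the file in the current folder; replace the two placeholder paths with the real locations of your training and validation images.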

Move custom_data.yaml into YOLO's datasets folder (yolo/data/datasets).

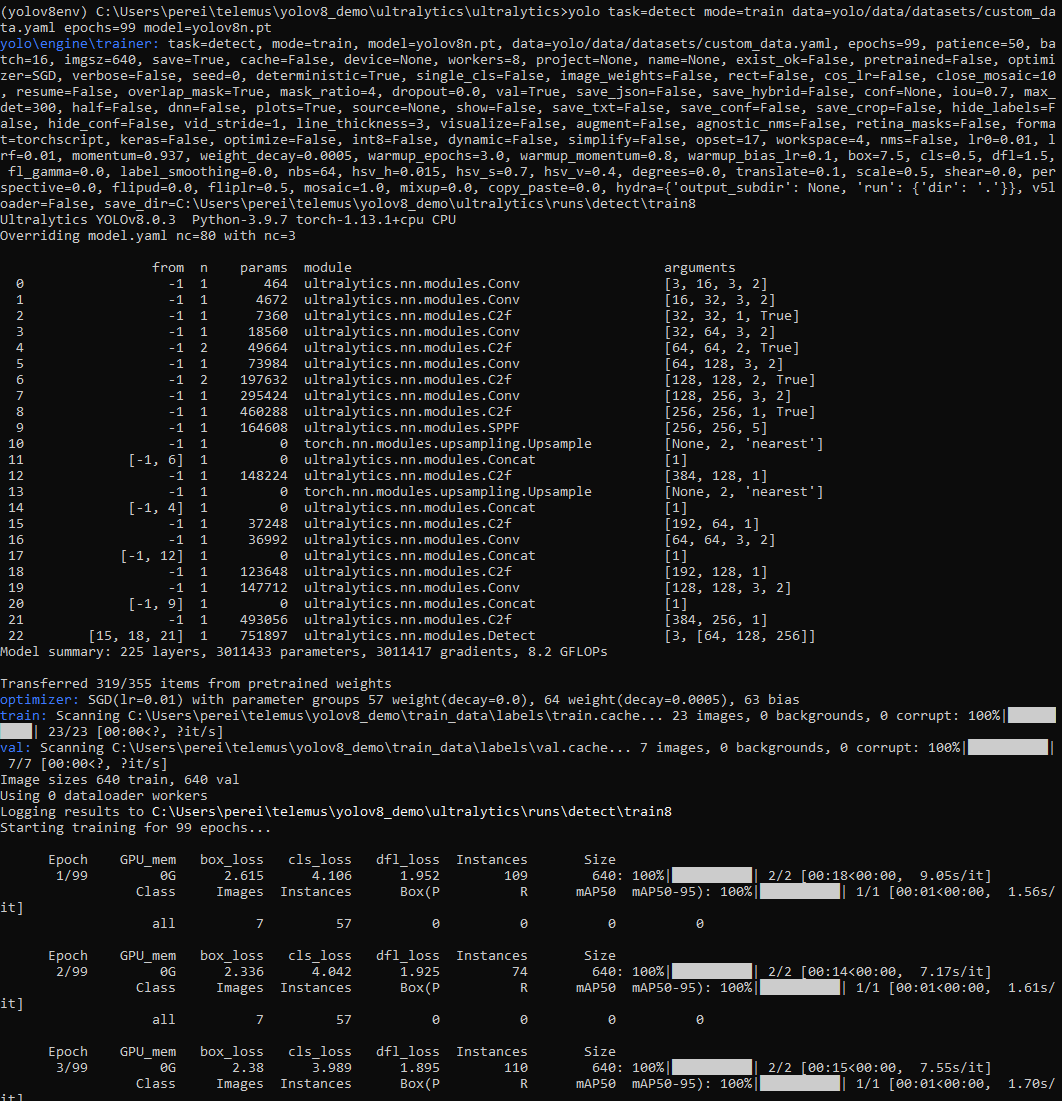

Since we now want to train a detection model, we keep task=detect and set mode=train. We train for 100 epochs, starting from the yolov8n.pt weights, and pass the path of custom_data.yaml as the dataset configuration.

yolo task=detect mode=train data=yolo/data/datasets/custom_data.yaml epochs=100 model=yolov8n.pt

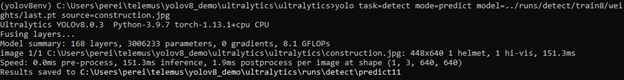
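Training can also be started from Python. This is a minimal sketch under the same assumptions as the command above (ultralytics installed, custom_data.yaml in place); train_detector is a hypothetical helper name of mine, not a library function.

```python
def train_detector(data_yaml, epochs=100, weights="yolov8n.pt"):
    """Fine-tune a YOLOv8 model on the dataset described by `data_yaml`.

    train_detector is a hypothetical helper written for this article.
    """
    # Imported inside the function so this file can be loaded
    # even on a machine where ultralytics is not installed yet.
    from ultralytics import YOLO

    model = YOLO(weights)  # start from the pretrained weights
    # Same settings as the command line: dataset config and epoch count.
    model.train(data=data_yaml, epochs=epochs)
    return model
```

A call such as train_detector("yolo/data/datasets/custom_data.yaml") mirrors the command line above and returns the model object, so you can keep working with it in the same script.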

Step 6: After training, we test the model on new data. Here we pass the path of our newly trained weights as the model. The output is saved in a predict folder under “runs\detect\”.

The same command also detects objects in videos when we pass a video path as the source.

yolo task=detect mode=predict model=../runs/detect/train8/weights/last.pt source=construction.mp4
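The command above points at train8 because that was my eighth training run; your folder number will likely differ. The sketch below looks for the most recently modified last.pt under the runs folder, assuming the train*/weights/ layout seen in the path above. latest_weights is a hypothetical helper name, and the default runs_dir is a guess you may need to adjust.

```python
from pathlib import Path


def latest_weights(runs_dir="runs/detect", filename="last.pt"):
    """Return the newest train*/weights/<filename> under runs_dir, or None."""
    # Assumes each training run lives in its own train* folder.
    candidates = list(Path(runs_dir).glob(f"train*/weights/{filename}"))
    if not candidates:
        return None
    # Pick the file that was modified most recently.
    return max(candidates, key=lambda p: p.stat().st_mtime)
```

The returned path can then be used as the model= value in the predict command, or passed to whatever Python code loads the weights.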

I hope this article on using YOLOv8 was useful. You can reach out to me through [LinkedIn](https://www.linkedin.com/in/melroy-pereira/).

Cheers

[Melroy Pereira](https://www.linkedin.com/in/melroy-pereira/)